Greetings, fellow digital scholars!

Please allow me to introduce myself. My name is Jose Tomas Atria, and I am an intern at the Digital Social Sciences Center (DSSC) for the 2014/2015 academic year. I am a PhD student in the Sociology Department at Columbia, and my dissertation work consists of exploiting a massive digitized and marked-up corpus of text to study the micro-processes that underlie “modernization”, or what we sociologists know as the passage from “community” (Gemeinschaft) to “society” (Gesellschaft).

My work at the DSSC will consist of making the data and results of my research available to other researchers and the general public through (hopefully) accessible interfaces for data exploration and representation. More on this below. In addition, my project relies on a number of tools for processing XML data, managing unstructured information at large scale, and analyzing statistical and geographic data, and one of my main interests as a DSSC intern is to share my experience with these tools with the digital scholarship community at Columbia.

The Corpus

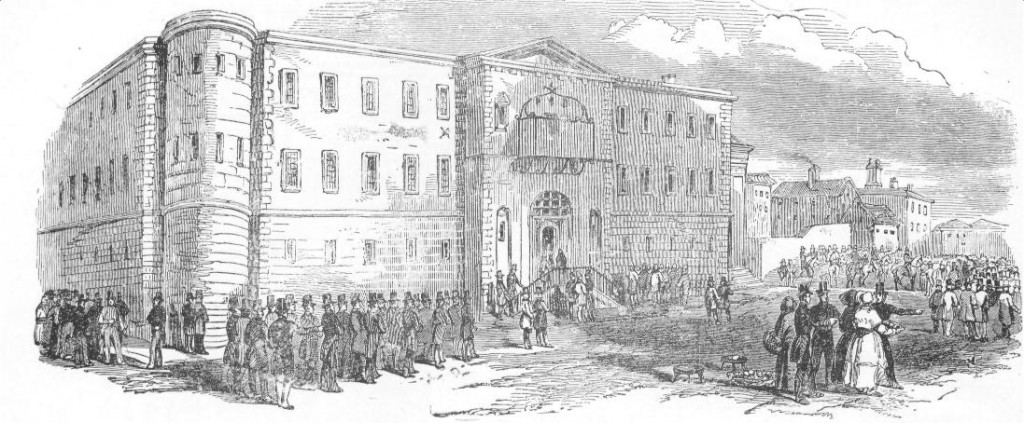

The Old Bailey Online is a digital archive of encoded transcriptions of the Proceedings of the Old Bailey, a serial publication of transcribed criminal trials from the principal criminal court for London and Middlesex, running from April 29th 1674 to April 4th 1913. The Old Bailey was a seasonal court that sat in roughly ten sessions each year, which gives the archive a total of 2,163 “Sessions Papers” covering a period of 238 years.

Each Sessions Paper contains three types of sections: front matter, one or more trial accounts, and a final summaries section that compiles the verdicts and punishments handed down in that session. The front matter includes general information about the session, such as the list of court officials and the person responsible for transcribing the trials and publishing the paper. The trial accounts include a description of the crimes tried, information about defendants, victims and witnesses, and, in most cases, transcriptions of the testimony. The summaries list all the sentences and punishments meted out by the court.
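To make the three-part structure concrete, here is a minimal sketch that counts the sections of a Sessions Paper by type. It assumes, as a simplification, that each section is a `div1` element carrying a `type` attribute (`frontMatter`, `trialAccount`, `punishmentSummary`) inside a `div0` root; those element names and the sample IDs are assumptions for illustration, not a faithful copy of the corpus schema.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# A hypothetical, heavily simplified Sessions Paper. The element names (div0,
# div1), the type values and the IDs are assumptions made for this sketch.
SAMPLE = """
<div0 type="sessionsPaper" id="s-example">
  <div1 type="frontMatter" id="f-example-1">Court officials, printer ...</div1>
  <div1 type="trialAccount" id="t-example-1">First trial ...</div1>
  <div1 type="trialAccount" id="t-example-2">Second trial ...</div1>
  <div1 type="punishmentSummary" id="s-example-1">Sentences ...</div1>
</div0>
"""


def count_sections(xml_text: str) -> Counter:
    """Count the direct child sections of a Sessions Paper by their type attribute."""
    root = ET.fromstring(xml_text.strip())
    # findall("div1") only matches direct children of the root element.
    return Counter(div.get("type") for div in root.findall("div1"))


print(count_sections(SAMPLE))
```

Run over every Sessions Paper, a count like this is one quick way to check figures such as the number of trial accounts per session.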

The number of trial accounts per session ranges from 1 to 481. In total, the archive contains 197,752 trial accounts, 211,203 criminal offence descriptions and 1,212,456 personal names. Other entities are annotated in the corpus as well, including over 70,000 place names, dates, occupations and other personal labels.

The Tools of the Trade

To wrestle the beast that is the Old Bailey Corpus, three broad families of tasks need to be carried out, and I'm using a different set of technologies for each. The first is processing the XML in which the corpus is distributed. The second is transforming the unstructured text into data structures amenable to analysis. The third is the actual analysis of the data contained in the corpus. I will describe these tasks and the tools I'm using in very general terms.

XML processing for this project involves two distinct tasks: exploratory analysis of the corpus, and the construction of input/output layers to read and write XML. For the first, I'm relying on XQuery, a standardized language that allows XML documents to be queried as if they were a database, exploiting the tree structure of XML files. For the second, the general approach is to build SAX parsers, which treat the XML data as a stream of events (an element opens, some text appears, an element closes) instead of loading the whole document tree into memory.
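As an illustration of the streaming approach, here is a minimal SAX handler written with Python's standard `xml.sax` module (the project's own parsers may well use a different language and library). It collects the text of every `<placeName>` element as the parser streams through the input; the `placeName` tag and the sample sentence are assumptions made for the example.

```python
import xml.sax


class PlaceNameHandler(xml.sax.ContentHandler):
    """Collect the text of every <placeName> element while streaming the input.

    The element name "placeName" is an assumption about the corpus markup.
    """

    def __init__(self):
        super().__init__()
        self.place_names = []
        self._inside = False  # are we currently inside a <placeName>?
        self._buffer = []     # text chunks seen for the current element

    def startElement(self, name, attrs):
        if name == "placeName":
            self._inside = True
            self._buffer = []

    def characters(self, content):
        # The parser may deliver an element's text in several chunks.
        if self._inside:
            self._buffer.append(content)

    def endElement(self, name):
        if name == "placeName":
            self.place_names.append("".join(self._buffer).strip())
            self._inside = False


# Hypothetical fragment of a trial account.
SAMPLE = b"""<div1 type="trialAccount">
The prisoner was taken in <placeName>Fleet Street</placeName>
and carried to <placeName>Newgate</placeName>.
</div1>"""

handler = PlaceNameHandler()
xml.sax.parseString(SAMPLE, handler)
print(handler.place_names)  # ['Fleet Street', 'Newgate']
```

For a file on disk, `xml.sax.parse(path, handler)` works the same way; the parser never holds the whole document tree in memory, which is the main reason to prefer SAX over tree-based parsing for large inputs.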

The main workhorse of this project, which turns the unstructured text into data and does most of the heavy-duty analysis (tokenization of text, disambiguation of personal names, etc.), is Apache UIMA (Unstructured Information Management Architecture), a framework for managing and analyzing unstructured data. UIMA is incredibly powerful (it almost feels like overkill for this project!), and one of its main advantages is its modular design: I can plug any analysis component I want to use into the processing pipeline with minimal work on my part.
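UIMA's own components are typically written in Java against its own APIs, and the sketch below does not use UIMA at all. It is only a toy Python illustration of the modular idea: each component reads and adds annotations on a shared document object (a rough stand-in for UIMA's CAS), so components can be swapped or reordered freely. The `Document` class and both annotators are hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Document:
    """A toy stand-in for a UIMA CAS: the text plus a list of annotations."""
    text: str
    annotations: list = field(default_factory=list)  # (type, start, end) tuples


def tokenizer(doc: Document) -> None:
    # Annotate each run of word characters as a Token.
    for m in re.finditer(r"\w+", doc.text):
        doc.annotations.append(("Token", m.start(), m.end()))


def capitalized_word_annotator(doc: Document) -> None:
    # A deliberately naive "name candidate" annotator that reuses the tokens
    # produced upstream instead of re-scanning the text.
    for kind, start, end in list(doc.annotations):
        if kind == "Token" and doc.text[start].isupper():
            doc.annotations.append(("NameCandidate", start, end))


def run_pipeline(text, components):
    doc = Document(text)
    for component in components:  # any callable taking a Document can be plugged in
        component(doc)
    return doc


doc = run_pipeline("John Smith stole a watch", [tokenizer, capitalized_word_annotator])
names = [doc.text[s:e] for kind, s, e in doc.annotations if kind == "NameCandidate"]
print(names)  # ['John', 'Smith']
```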

Finally, the statistical and geographic data produced by the previous tasks will be analyzed in R and in a GIS application that I have not yet settled on, though probably QGIS, now that it is available on the DSSC computers.

The Products

The main product of this internship should be a set of tools that allows a lay user to explore and interact with the information contained in the Old Bailey corpus. I have not decided what the “front-end” will look like yet, but I expect it to be a website where you can search and sort results by geographic and temporal location and pull in information from other related archives (something I will discuss in a future blog post).

To get there, the first step is to collect and clean the information contained in the archive so that other “real-world” data can be attached to it. The best example of this, and what I am working on right now, is turning place names into geographic entities. This involves collecting all “place name” annotations from the corpus, cleaning and normalizing them, figuring out what type of place each one refers to, using that information to look for other instances of the same locations or for locations that are not marked up in the text, and finally querying some external resource that can match each named location to geographic information.
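The cleaning, normalizing and typing steps can be sketched roughly as follows. This is a minimal illustration under stated assumptions: the normalization rules, the suffix-to-type table and the `gazetteer` mapping (a stand-in for the external resource) are all hypothetical, and no real coordinates are used.

```python
import re
from collections import defaultdict

# Hypothetical rules mapping the last word of a name to a place type.
PLACE_TYPES = {"street": "street", "lane": "street", "square": "square", "parish": "parish"}


def normalize(name: str) -> str:
    """Lower-case, treat hyphens as spaces, expand a trailing 'St.', drop punctuation."""
    name = name.lower().replace("-", " ").strip()
    name = re.sub(r"\bst\.?$", "street", name)  # only at the end, so "St. Paul's" is untouched
    name = re.sub(r"[^\w\s]", "", name)
    return re.sub(r"\s+", " ", name).strip()


def classify(normalized: str) -> str:
    """Guess a place type from the last word of a normalized name."""
    words = normalized.split()
    return PLACE_TYPES.get(words[-1], "unknown") if words else "unknown"


def build_places(raw_names, gazetteer):
    """Group spelling variants and look each distinct place up in a gazetteer.

    `gazetteer` is any mapping from normalized name to coordinates; it stands
    in for the external resource and is an assumption of this sketch.
    """
    variants = defaultdict(set)
    for raw in raw_names:
        variants[normalize(raw)].add(raw)
    return {
        key: {
            "type": classify(key),
            "variants": sorted(found),
            "coords": gazetteer.get(key),  # None when the lookup fails
        }
        for key, found in variants.items()
    }


places = build_places(["Fleet-street", "Fleet St.", "FLEET STREET", "Newgate"], gazetteer={})
print(len(places))                     # 2
print(places["fleet street"]["type"])  # street
print(places["newgate"]["coords"])     # None
```

Grouping variants before the lookup means each distinct place is queried once, and the list of variants kept per place can later help find unannotated mentions of the same location.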

A similar process will have to be carried out for the dates and other marked-up entities and, most importantly, for the personal names in the archive, which need to be disambiguated (e.g., determining whether the “John Smith” from trial t18650922-1 is the same person as the “John Smith” from trial t1874762-56). All of these problems are non-trivial, but tractable.
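One common first step in name disambiguation is a cheap compatibility filter that rules out pairs of mentions that clearly cannot be the same person, before any finer scoring. The sketch below is a hypothetical example of such a filter, not the project's method: it compares normalized names, gender when both are known, and the birth year implied by age at trial, with an assumed tolerance of two years. The `PersonMention` fields and the sample mentions are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersonMention:
    """One occurrence of a personal name in a trial (fields are hypothetical)."""
    name: str
    trial_year: int
    gender: Optional[str] = None
    age: Optional[int] = None

    def birth_year(self) -> Optional[int]:
        # Approximate: an age at trial fixes the birth year only to within a year.
        return None if self.age is None else self.trial_year - self.age


def normalize_name(name: str) -> str:
    """Lower-case and collapse whitespace so 'John  Smith' matches 'john smith'."""
    return " ".join(name.lower().split())


def could_be_same_person(a: PersonMention, b: PersonMention, tolerance: int = 2) -> bool:
    """Return False when the two mentions clearly conflict.

    True only means 'not ruled out'; it is a filter, not a verdict.
    """
    if normalize_name(a.name) != normalize_name(b.name):
        return False
    if a.gender and b.gender and a.gender != b.gender:
        return False
    ba, bb = a.birth_year(), b.birth_year()
    if ba is not None and bb is not None and abs(ba - bb) > tolerance:
        return False
    return True


a = PersonMention("John Smith", 1865, gender="male", age=30)   # born c. 1835
b = PersonMention("John  Smith", 1874, gender="male", age=39)  # born c. 1835
c = PersonMention("John Smith", 1874, gender="male", age=20)   # born c. 1854
print(could_be_same_person(a, b))  # True
print(could_be_same_person(a, c))  # False
```

Pairs that survive a filter like this would still need stronger evidence (shared addresses, occupations, co-defendants) before being merged.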

After this process of “data marshalling”, the second step is to add relevant information about these entities that may be available from other resources. For example, if we find a person named Charles Johnson, male, aged 34 in 1825, from Manchester, a carpenter by occupation, we can query a people-search database (like those provided by genealogy sites) to find more data about this person and add references to these resources in our application.
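Turning a record like the Charles Johnson example into a search query mostly means mapping its fields onto whatever parameters the external service accepts. The sketch below assumes a hypothetical endpoint (`https://example.org/people-search`) and invented parameter names; real genealogy services each have their own APIs and terms of use. The one piece of reasoning it encodes is that someone aged 34 in 1825 was born in 1790 or 1791, depending on whether their birthday had passed.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names; not a real service's API.
SEARCH_URL = "https://example.org/people-search"


def build_search_url(first, last, gender, age, year, place, occupation):
    """Build a query URL for a person record extracted from the corpus."""
    params = {
        "first_name": first,
        "last_name": last,
        "gender": gender,
        # Aged `age` in `year` means born in (year - age - 1) or (year - age).
        "birth_year_min": year - age - 1,
        "birth_year_max": year - age,
        "residence": place,
        "occupation": occupation,
    }
    return SEARCH_URL + "?" + urlencode(params)


url = build_search_url("Charles", "Johnson", "male", 34, 1825, "Manchester", "carpenter")
print(url)
```

The URL is only built here, not fetched; actually sending it and parsing the response would depend entirely on the service chosen.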

Finally, we can display all of the information collected about people and places in a way that makes their relationships easy to see, for example by mapping locations and drawing connections between them when they are related to the same individual or the same crime.
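The connections to draw on such a map can be derived with a simple grouping step: link every pair of places that share a person or a trial. The sketch below assumes, hypothetically, that the earlier steps produce `(place, key)` pairs, where the key identifies a person or a trial; the sample data is invented. The resulting edge list could then be handed to a GIS application or a web map as line features.

```python
from collections import defaultdict
from itertools import combinations


def place_links(mentions):
    """Link every pair of places that share a person or a trial.

    `mentions` is an iterable of (place, key) pairs, where key identifies a
    person or a trial. Returns {(place_a, place_b): set_of_shared_keys}.
    """
    places_by_key = defaultdict(set)
    for place, key in mentions:
        places_by_key[key].add(place)
    edges = defaultdict(set)
    for key, places in places_by_key.items():
        # Sorting makes each pair appear in a single, canonical order.
        for a, b in combinations(sorted(places), 2):
            edges[(a, b)].add(key)
    return dict(edges)


# Hypothetical output of the earlier extraction and disambiguation steps.
mentions = [
    ("Fleet Street", "person:John Smith"),
    ("Newgate", "person:John Smith"),
    ("Newgate", "trial:t-example-1"),
    ("Holborn", "trial:t-example-1"),
]
print(place_links(mentions))
```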

If all goes well, this will allow others to explore the Old Bailey Corpus and take advantage of this enormous body of English text about the lives of everyday people during a period of profound social transformation. I know that this is an ambitious project, but I am very excited to work on it and to share my work. Stay tuned for further updates!