This is Part 2 of a post about the perceptual bases for virtual reality. Part 1 deals with the perceptual cues related to the spatial perception of audio.

The chief goal of the most recent virtual reality hardware is to simulate depth perception in the viewer. Depth perception in humans arises when the brain reconciles the images from each eye, which differ slightly as a result of the separation of the eyes in space. VR headsets position either a real or a virtual display (a single LCD panel showing a split screen) in front of each eye. Software sends to the headset a pair of views onto the same 3D scene, rendered from the perspective of two cameras in the virtual space that are separated by the distance between the user’s eyes. Accurate measurement and propagation of this interpupillary distance (IPD) is important for effective immersion. The optics inside the Oculus Rift, for instance, are designed to tolerate software changes to the effective IPD within a certain operational range without requiring physical calibration. All these factors taken into consideration, when the user allows themselves to focus on a point beyond the surface of the headset display, they will hopefully experience a perceptually fused view of the scene with the appropriate sense of depth that arises from stereopsis.
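
As a rough illustration of the two-camera setup described above, the following minimal sketch places a left and a right virtual camera half an IPD to either side of the head position. The `Vec3` type, the `eye_positions` function and the default IPD of 0.064 m are assumptions made for the example; they are not taken from any vendor SDK, and a real application would read the IPD from the headset configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def offset(self, direction: "Vec3", amount: float) -> "Vec3":
        """Return this point moved by `amount` along `direction`."""
        return Vec3(
            self.x + direction.x * amount,
            self.y + direction.y * amount,
            self.z + direction.z * amount,
        )


def eye_positions(head: Vec3, right: Vec3, ipd_m: float = 0.064):
    """Return (left_eye, right_eye) camera positions.

    `head` is the midpoint between the eyes, `right` is a unit vector
    pointing to the viewer's right, and `ipd_m` is the interpupillary
    distance in metres (0.064 is an assumed, illustrative default).
    """
    half = ipd_m / 2.0
    return head.offset(right, -half), head.offset(right, half)


# Hypothetical viewer standing at the origin with eyes 1.7 m above the floor.
left_eye, right_eye = eye_positions(Vec3(0.0, 1.7, 0.0), Vec3(1.0, 0.0, 0.0))
print(left_eye, right_eye)
```

Each of the two positions would then be used as the origin of its own view of the scene, one per eye.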

However, stereoscopic cues are not the only perceptual cues that contribute to a sense of depth. For example, the widely understood motion parallax effect is a purely monocular depth cue: as we move our head, we expect objects closer to us to seem to move faster than those that are farther away. Many of these cues are experiential truisms: objects farther away seem smaller, opaque objects occlude, and so on. Father Ted explains it best to his perennially hapless colleague Father Dougal in this short clip.
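
To put numbers on motion parallax, here is a small sketch that computes how far an object directly ahead appears to shift, in degrees of visual angle, when the head moves sideways. The function name and the example distances are made up for illustration, and the geometry is deliberately simplified to a single sideways translation.

```python
import math


def parallax_shift_deg(head_shift_m: float, distance_m: float) -> float:
    """Apparent angular shift of an object that started directly ahead,
    after the head translates sideways by `head_shift_m` metres."""
    return math.degrees(math.atan2(head_shift_m, distance_m))


# The same 10 cm head movement, seen against a near and a far object.
near = parallax_shift_deg(0.1, 1.0)   # object 1 m away
far = parallax_shift_deg(0.1, 10.0)   # object 10 m away
print(f"near: {near:.2f} deg, far: {far:.2f} deg")
```

The near object shifts by roughly 5.7 degrees and the far one by roughly 0.57 degrees, which is the difference in apparent speed that a renderer has to reproduce as the head moves.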

Others are less obvious, though well known to 2D artists, like the effect that texture and shading have on depth perception. Nevertheless, each one of these cues needs to be activated by a convincing VR rendering, and implemented in either the client application code or the helper libraries provided by the device vendor (for instance, the Oculus Rift SDK). Here, I discuss three additional contingencies that affect the sense of VR immersion and go beyond typical depth-perception cues, to show the importance of carefully understanding human perception in order to produce convincing virtual worlds.

Barrel distortion

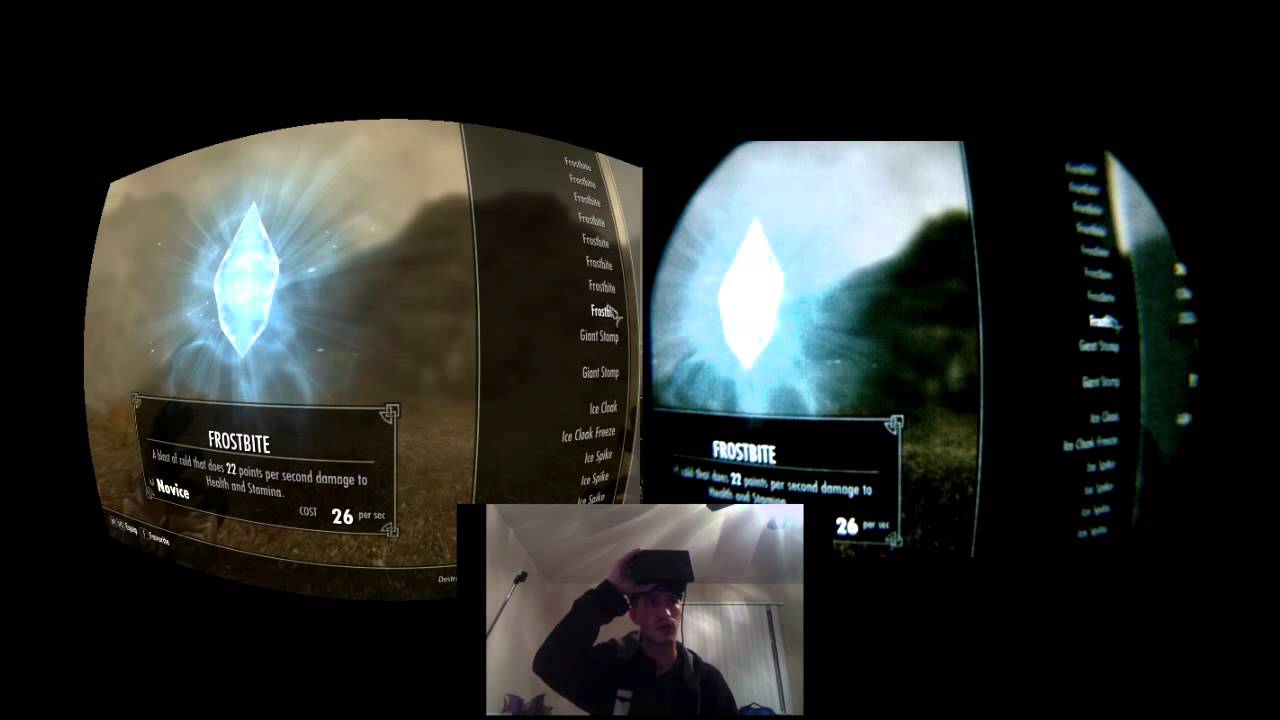

As this photograph, taken from the perspective of the user of an Oculus Rift, shows, the image rendered to each eye is radially distorted.

This kind of bulging distortion is known as barrel distortion. It is intentionally applied (using a special shader) by either client software or the vendor SDK to increase the effective field of view (FOV) of the user. The lenses used in the Oculus Rift correct for this distortion: they introduce the opposite (pincushion) distortion, so that the two cancel out and the user sees an undistorted image. The net result is an effective FOV of about 110 degrees in the case of the Oculus Rift DK1. This approaches the effective stereoscopic FOV for humans, which is between 114 and 130 degrees. Providing visual stimuli in our remaining visual field (our peripheral vision) is important for the perception of immersion, so other VR vendors are working on solutions that increase the effective FOV. One solution is to provide high-resolution display panels which are either curved or tilted in such a way as to encompass more of the real FOV of the user (e.g. StarVR). Another solution is to exploit Fresnel lenses (e.g. Weareality Sky), which can provide a larger effective FOV than regular lenses in a more compact package that is suitable for use in conjunction with a smartphone. Both of these methods have drawbacks: larger panels increase the total cost of the ‘wrap-around method’, while Fresnel lenses produce ‘milky’ images and their optical effects are more difficult to model in software than those of regular lenses.
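
The radial distortion described above is commonly modelled as a polynomial in the distance from the image centre. The sketch below applies such a model to a single point in normalised coordinates (centre at the origin). The function name and the coefficient values are hypothetical and are not the ones used by the Oculus SDK; a real distortion shader typically applies the mapping to texture lookups on the GPU rather than to individual points.

```python
def barrel_distort(x: float, y: float, k1: float = -0.2, k2: float = 0.0):
    """Map an undistorted point to its barrel-distorted position.

    Uses the radial model r_d = r * (1 + k1 * r^2 + k2 * r^4).
    In this forward mapping a negative k1 pulls points towards the
    centre more strongly the farther out they are, which produces the
    characteristic barrel bulge. k1 and k2 are illustrative values only.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


# The centre is unchanged; points near the edge are compressed the most.
for point in [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]:
    print(point, "->", barrel_distort(*point))
```

With these assumed coefficients, a point halfway to the edge moves inwards by 5%, while a point on the edge moves inwards by 20%.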

‘Smoothness’

An exceptionally important factor in the perception of immersion in virtual reality is the sense of smoothness of scene updates in response to both user movement in the real world and avatar movements in the virtual world. Perhaps the most important bottleneck to tackle in this process is the rendering pipeline. For this reason, high-end gaming setups are the norm for the recommended system specifications for virtual reality. Builds with more than 8GB of system RAM, a processor at least as fast as an Intel Core i5, and mid- to high-range PCIe graphics cards with at least 4GB of VRAM on board are de rigueur. Nvidia have partnered with component and system manufacturers to develop a commerce-led set of informal standards known as ‘VR Ready’.

Even if the rendering pipeline is able to provide frames to the display at a rate and reliability sufficient for the perception of fluid motion, the user motion detection subsystem must also be able to provide feedback to the game at a sufficiently high rate, so that motions in the real world can be translated to motions in the virtual scene in good time. The Oculus Rift has an innovative and very high-resolution head-tracking system that fuses accelerometer, gyroscope, and magnetometer data with computer vision data from a head-tracking camera that infers the position of an array of infrared markers in real space. Interestingly, even very smooth motions in the virtual world can induce nausea and break the perception of immersion if those motions cannot be reconciled with normal human behavior. So, for instance, in cutscene animations, care must be taken not to move the virtual viewpoint in ways that violate the constraints of human body motion. For example, rotating the viewpoint around the axis of the human neck in excess of 360 degrees causes disorientation and confusion.

Contextual depth cues can remedy confounding aspects of common game mechanics

Apart from those aspects of rendering that are application-invariant, VR game programming poses special problems for the maintenance of user immersion and comfort, because of the visual conventions of video game user interfaces. This excellent talk by video game developer and UI designer Riho Kroll indicates some of the solutions to potentially problematic representations of certain popular game mechanics.

Kroll gives the example of objective markers in the context of a first-person game, which are designed to guide the player to a target location on the map corresponding to the current game objective. Normally, objective markers are scale-invariant and unshaded, and therefore lack some of the important cues that allow the player to locate them in the virtual z-plane. Furthermore, objective markers tend to be excluded from occlusion reckonings. The consequence is that if the player’s view of the spatial context of an objective marker is completely occluded by another game object, almost all of the depth-perception cues for the location of the marker are unavailable. Kroll describes an inelegant but well-implemented solution: under such conditions of extreme occlusion, an orthogonal grid describing the z-plane is blend-superimposed over the viewport. This orthogonal grid recedes into the distance, behaving as expected according to the conventions of perspective and thereby providing a crucial and sufficient depth-perception cue in otherwise adversarial circumstances.
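
To see why a receding grid carries depth information that a scale-invariant marker does not, consider a simple pinhole projection. The sketch below is not Kroll's implementation; the function names, the eye height of 1.7 m, the focal length of 1.0 and the treatment of the grid as lying on the ground plane are all assumptions made for the example.

```python
def projected_size(world_size: float, depth: float, focal_length: float = 1.0) -> float:
    """On-screen size of an object under perspective projection.

    A scale-invariant objective marker ignores this relationship and is
    drawn at a constant size, which is why its size says nothing about depth.
    """
    if depth <= 0:
        raise ValueError("depth must be positive")
    return focal_length * world_size / depth


def grid_line_screen_y(depth: float, eye_height: float = 1.7,
                       focal_length: float = 1.0) -> float:
    """Screen-space height of a ground-plane grid line at `depth`,
    measured relative to the horizon at y = 0 (negative is below it)."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    return -focal_length * eye_height / depth


# Grid lines at doubling depths bunch up towards the horizon.
depths = [1.0, 2.0, 4.0, 8.0]
ys = [grid_line_screen_y(d) for d in depths]
print(ys)
```

The grid lines crowd together as they approach the horizon, so the position of a marker relative to the grid gives the player a usable estimate of its distance even when everything else around it is occluded.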